
Reluctantly, I paid for ChatGPT for three months in order to evaluate engagement with what we call chatbots, or responsive Artificial Intelligence (AI). It was disturbing, and I want to share my experience as a snapshot of the state of affairs today.

These interactive AI are based on the LLM, or “large language model”: a model trained, through machine learning, on an immense amount of text.

The largest and most capable models, designed for natural language processing and especially language generation, provide the core capabilities of chatbots. OpenAI’s models of this kind are called Generative Pre-trained Transformers, or GPTs; hence ChatGPT.

Back in 2008, with Robert Downey Jr.’s Tony Stark in Iron Man, Hollywood made talking to a hyper-advanced AI feel sexy.

First, his comprehensively integrated AI, named “Jarvis” and voiced by Paul Bettany, was the ultimate English butler. Tony Stark could make hip, insanely complex requests of his AI, and Jarvis carried them out in real time, dry wit intact.

By 2015, in the MCU, Jarvis was replaced with “F.R.I.D.A.Y.,” an acronym Marvel says stands for “Female Replacement Intelligent Digital Assistant Youth.” The name is also a tongue-in-cheek reference to “Girl Friday” or “Gal Friday,” a 20th-century, post-war term for a secretary or personal assistant. That term was itself a gender switch on the character Friday from Daniel Defoe’s novel Robinson Crusoe (1719).

In the novel, Crusoe names the islander he meets “Friday” because that was the day they met. Crusoe refers to him as his “man Friday.”

By extension, “girl Friday” came to be used for a woman. Today the OED considers the term dated, but it still defines it as “a female assistant, especially a junior office worker or a personal assistant to a business executive.”

Kerry Condon, a talented Irish actor with an excellent professional voice who has performed with the Royal Shakespeare Company, voiced F.R.I.D.A.Y. Yet they still gave her delivery a heavy vocal fry to make it sexier.

In 2013, an entire feature film, Her, starring Joaquin Phoenix and directed by Spike Jonze, was dedicated to a man having an intimate relationship with his AI operating system, voiced by Scarlett Johansson.

The robotic, unemotional tone of Siri and the Google Maps voice was beginning to disappear. Seductive voices were marketed heavily.

By 2017, in the Marvel Cinematic Universe, even Spider-Man needed a hip, tech-savvy, teenage relationship with AI.

High schooler Peter Parker’s AI, named Karen and voiced by Jennifer Connelly, was a chatty personal teacher and friend: “like a big sister, but, you know, not your sister,” they might have pitched. Karen was designed expressly for young people nationwide who were being exposed to chatbots of their own in real life.

So for thirteen years, from 2008 to 2021, Hollywood crafted voices for artificial intelligence, until real-world AI caught up and came into wide use by everyone. We were being taught how to talk to our machines.

Yeah, I’m not talking to it.

I’ve avoided using

“Siri” (Apple, 2011),

“Alexa” (Amazon, 2014),

or the more recent “Gemini” (Google, 2023).

These are all voice-activated chatbots that respond to their names, or to a name you give them. Here is the original ad for Siri:

Ten years later, starting in 2021, ads like this one, which show people using chatbots and so explain how to use them, ran during the high-profile commercial breaks of the Super Bowl and Christmas and across every gaming and streaming platform. They have made these AI far more common.

By January 2022, my friend Tom, a consistent early adopter for the 30 years I’ve known him, regularly talked to Alexa throughout his house and in his car. Between ’22 and today, the use of interactive, voice-activated, responsive artificial intelligence, or chatbots, has increased and diversified. It’s popular now.

The ask is known as a prompt.

Because I can’t bring myself to talk to it or give it a name, my first interactions with ChatGPT were entirely text-based, even when they concerned images.

This summer, on a trip to Indonesia, I visited Bali Bird Park, where I could see many of the exotic birds only inside their large, caged enclosures. I decided my first ask of ChatGPT would be to remove the cage bars from my photos.

The prompt was: “remove the bars from in front of the bird(s)”

This is the Eclectus Parrot.

Or is it?

What do you see? Is the parrot behind the bars the same? Take a look at the feathers, the talons.

At the heart of the problem with the popularization of AI-generated images is the approximation the machine performs, which goes unnoticed.

This withers the attention of the viewer and weakens their powers of observation. They think: “Sure, that’s good enough – now you can see the parrot.” But they don’t notice the photographed bird has been changed.

In the same way that compression drove us to trade high-fidelity audio for the ease of owning, playing and manipulating music, these AI-generated images weaken our perception. An assault on the ears first, and now the eyes.

My second prompt was to remove the bars from a cage holding a more unusual bird. The Sulawesi Hornbill has a fantastic appearance. I photographed this one hopping around outside the cages, so there were no bars to remove:

Below its beak, there is an area of knobbed or wrinkled skin and feathers that gives it a larger appearance – a false mouth lower on its body. It is an unusual creature. What would AI make of it?

Sigh. If you just glanced at it, look at it again! It’s a different thing.

There are details that are just completely wrong. If I were to share the photo on social media without the bars and say, “Hey everyone, look at this Sulawesi Hornbill I photographed in Bali!” it would be a lie.

This shocked and saddened me, because I know most people won’t look closely enough. They won’t carefully discriminate.

Because I quit social media in 2021, I already notice, more than most people, the WRONG quick takes and mistakes that grow out of these small but not insignificant errors made by AI.

We must train ourselves to be even more vigilant.

Next, I decided to use AI for something rooted more in contemporary pop culture. A 2025 television show called Alien: Earth introduced a creature known as t. ocellus, a sort of octopus eyeball capable of parasitically occupying other creatures by replacing their eyeball with its own and manipulating the body it occupies. It was a fantastic addition to the science fiction world of the Alien franchise, and to fictional creatures in general. I drew it freehand for fun.

The TV show raised the idea, without ever showing it, that the ocellus could occupy the body of the larger, more powerful creature from the Alien franchise known as the Xenomorph. I think everybody who saw the show wondered what that would look like. The image of the small, powerful eyeball creature inside a Xenomorph’s head sprang instantly to mind, though the show never presented it.

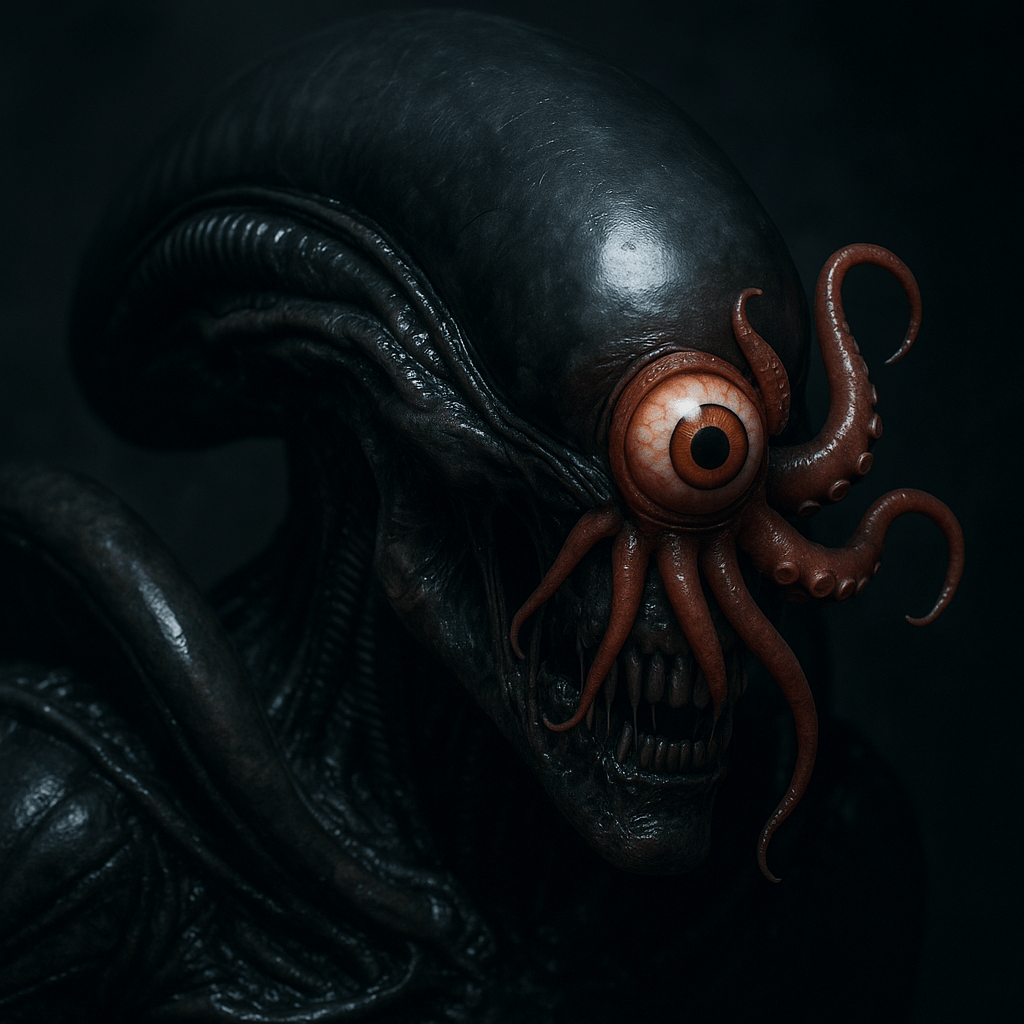

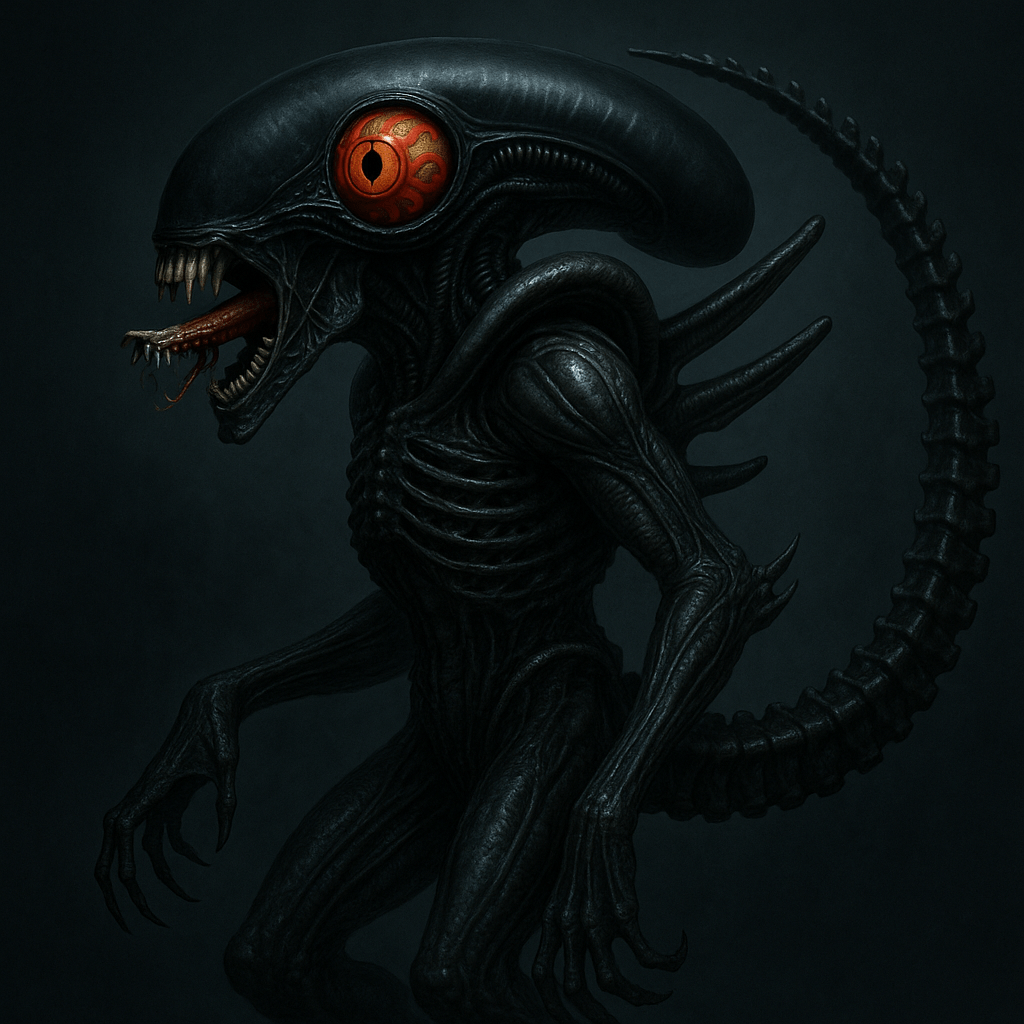

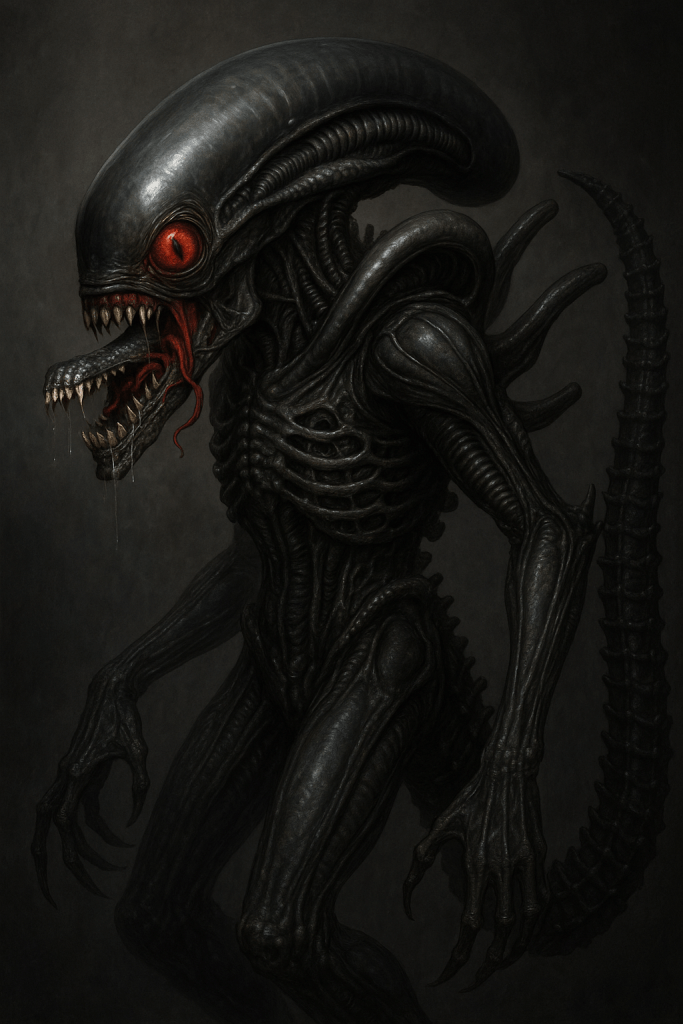

So I set ChatGPT to the task. I asked it to make the Xenomorph from the Alien franchise as if it had been occupied by the t. ocellus. ChatGPT would be drawing on general cultural knowledge of both the Xenomorph and the ocellus, thanks to the new TV show. The subject was fresh, yet it had an older pop-culture side: the Alien franchise has been around for more than forty years. ChatGPT started out cartoonish:

So I asked it to be more filmic:

But the single eye in the middle of the head was wrong, so I directed it to show the Xenomorph in profile. It made several versions as I tweaked the prompt, and you can see below that in some of them the inner mouth was wrong, because ChatGPT couldn’t keep the two sets of jaws distinct. But this one is perhaps the best:

It is an interesting exercise in iteration. I began to see that even when I didn’t ask ChatGPT for specific changes between iterations, it would make some anyway, in pursuit of greater verisimilitude. Still, each time it would fail in some other way. It was as if, could I just amalgamate several of its images into one that had all the correct features, we’d be okay. Troublesome.

I decided to use myself as a model for my next request:

My prompt was: “create a loteria card called ‘the Writist’ with this image.”

It caught my wonky eye! I tuned the prompt.

I said, “make the card number 47 and make the image more realistic.”

It was then that I showed it to my friend Sofia, who commented, “Well, it should be ‘El,’ not ‘The,’” which is correct. So I asked ChatGPT to change it. I also took the time to ask ChatGPT to turn the cigarette into a joint.

Why did it suddenly add the strip of blue color when I changed it to “El”? Was it pursuing a more “Loteria card” feel?

And why did it return to a more generic, comic-book look instead of the more realistic one?

It thinks “joint” means a conical spliff from Amsterdam.

I was ready to quit using ChatGPT. It left a terrible taste in my mouth and whatever the corresponding terms for negative feelings in my eyes are.

But the World Series was about to start and I had been telling people that the Dodgers have a Three-Headed Japanese Hydra. By this I meant they have three Japanese players: Shohei Ohtani, Yoshinobu Yamamoto and Roki Sasaki, who are formidable.

Would ChatGPT know who these Japanese players were? What they look like? The World Series was as fresh as Alien: Earth in terms of current volume of discourse.

So I asked ChatGPT to make a Three-headed Hydra emerging from the sea with the heads of Shohei Ohtani, Yoshinobu Yamamoto and Roki Sasaki:

Definitely NOT the three gentlemen in question. Ohtani, maybe. So I asked it to do the same with the singers of Tony! Toni! Toné!:

It got crazy. And sort of racist.

Maybe it exposed something about the racial biases in ChatGPT’s training data and its view of the world.

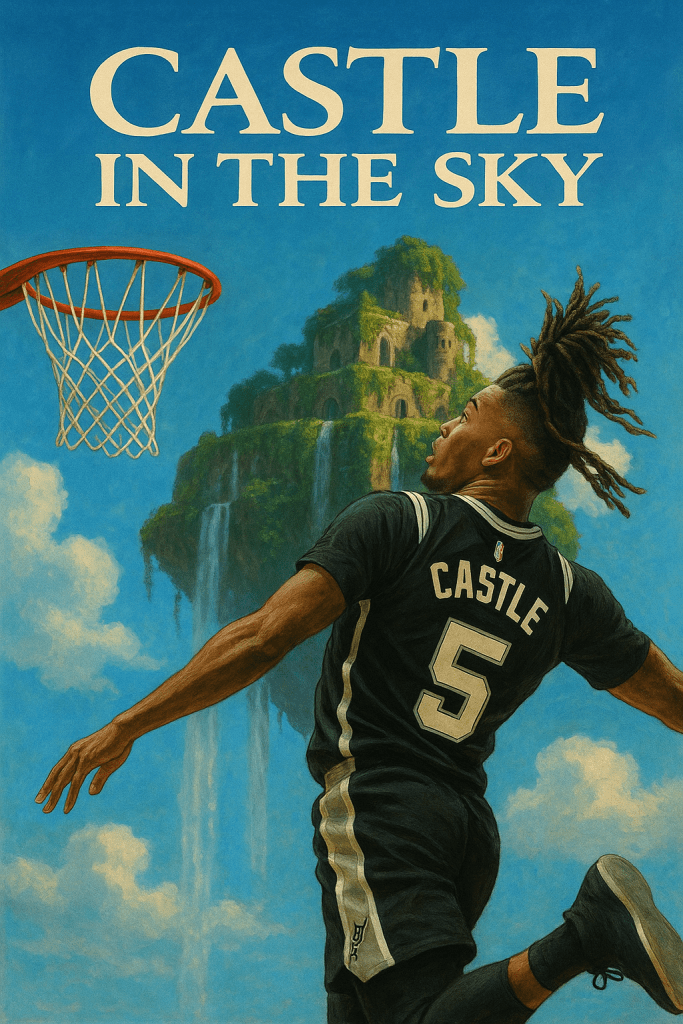

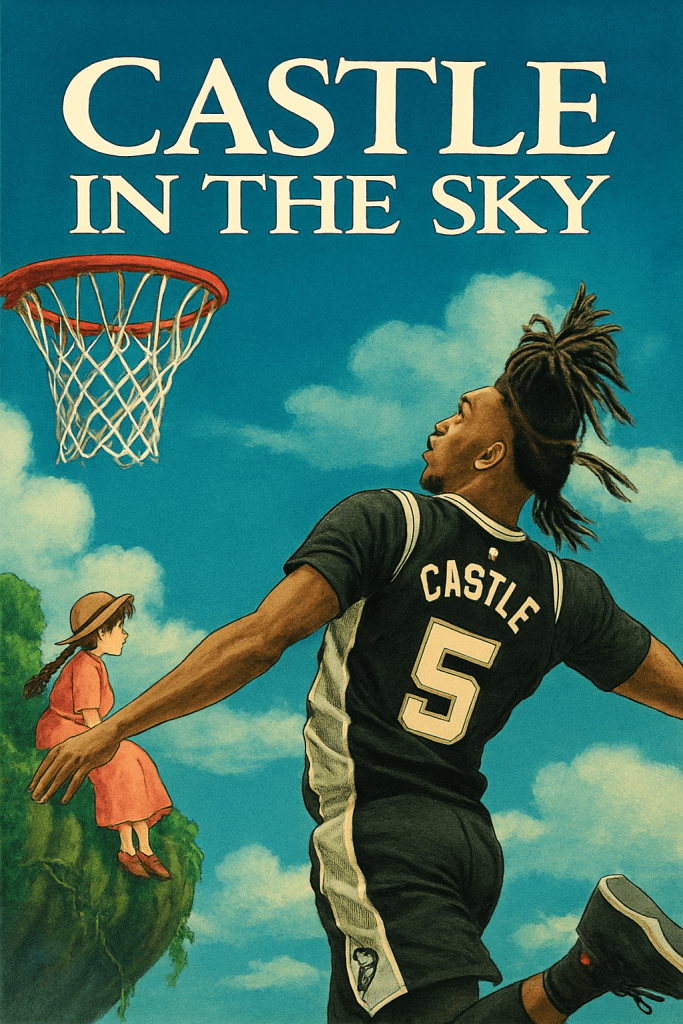

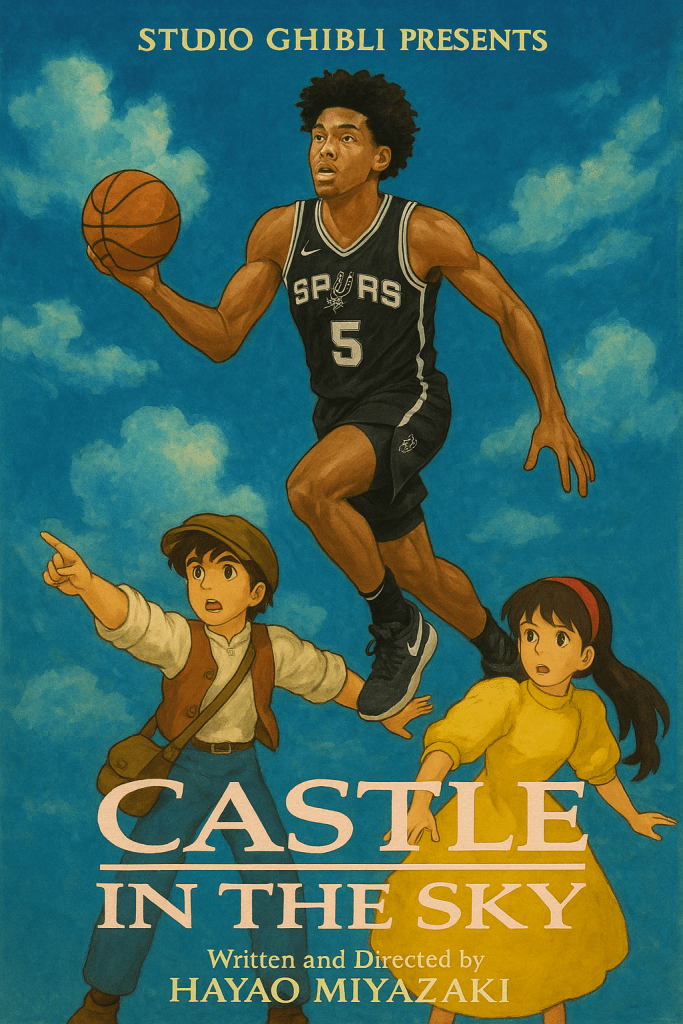

Finally, I asked ChatGPT to make an image based on the movie poster for Miyazaki Hayao’s animated feature film Castle in the Sky, featuring instead Spurs guard Stefon Castle flying through the air for a dunk.

This was as close as we got:

The profile is just … wrong. The ear is wrong. The face.

There were some pretty bad ones. Unlike the random Black and Japanese faces it pulled from its own awareness for the Hydras, this was ChatGPT approximating a person from a photograph I uploaded, like the image I shot of myself for the Loteria card.

It kind of looks like me. But not exactly. That kind of looks like Stefon Castle.

And this one definitely doesn’t.

Doesn’t look like him.

I bet if you asked it for DJT it would.

** UPDATE and CONCLUSION **

I ran the ChatGPT image through Google’s AI animation, and this is the final piece of that project: from a staged photo behind Silver Spur, through ChatGPT, to Google AI.